Background on the 2021-2023 Data Protection Survey

The data protection survey was conducted from 2021 onwards. Responses to the survey were solicited via email and social media.

In today's world, business is constantly changing and outsourcing is the new black, so it is challenging to analyse the risk status reliably. Data should be available in all situations, including exceptional times, as demanded by the business and by compliance rules such as NIS-D and the GDPR. For business, data availability and infrastructure are keys to success.

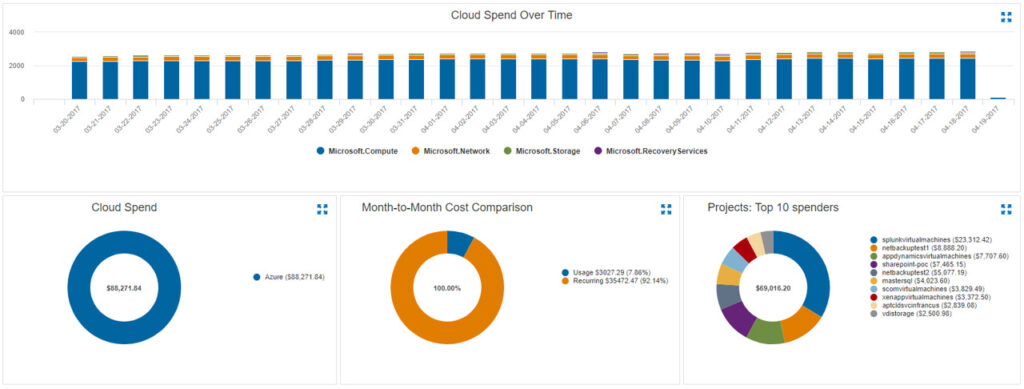

In 2020, it is common to have infrastructure solutions from on-premises, service provider and cloud sources. Such combinations can easily create an environment that is difficult to observe as a whole. This is a concern not only for costs but also for data availability and business continuity. Happy is the CIO or CTO who is in charge of services and hardware provided by only one or two companies. With booming business, a growing number of providers and environments is the rule.

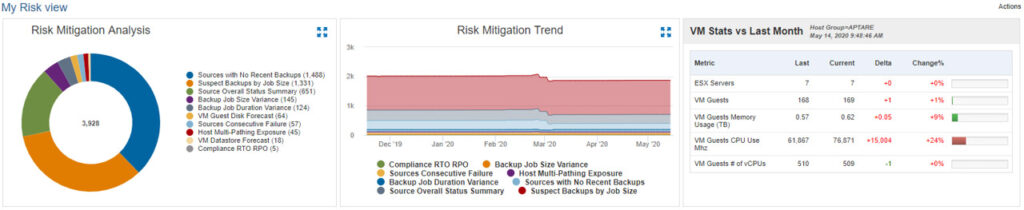

A dashboard view? Is all of this still needed? Which versions are we running? Are all versions up to date or at the planned level? Are all servers covered by backup routines? Can we restore within the recovery time objective (RTO) set by that critical business unit? Is the number of new virtual services and servers growing, and are they under control or even backed up?
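Questions such as the backup-coverage and RTO checks lend themselves to automation. The sketch below is a minimal, hypothetical illustration and not the API or method of any particular product: it assumes an inventory in which each server records whether it is under a backup routine, how much data a restore would involve, and an assumed restore throughput. It estimates restore time simply as data size divided by throughput, ignoring overheads such as media handling or application recovery.

```python
from dataclasses import dataclass


@dataclass
class Server:
    # Hypothetical inventory record; field names are illustrative assumptions.
    name: str
    has_backup: bool             # is the server under a backup routine?
    data_gb: float               # amount of data a full restore would involve
    restore_gb_per_hour: float   # assumed restore throughput (must be positive)


def restore_hours(server: Server) -> float:
    """Estimate restore time as data size divided by restore throughput."""
    if server.restore_gb_per_hour <= 0:
        raise ValueError(f"{server.name}: restore throughput must be positive")
    return server.data_gb / server.restore_gb_per_hour


def check_inventory(servers, rto_hours):
    """Return (servers without backup, backed-up servers whose estimated restore exceeds the RTO)."""
    unprotected = [s.name for s in servers if not s.has_backup]
    over_rto = [
        s.name for s in servers
        if s.has_backup and restore_hours(s) > rto_hours
    ]
    return unprotected, over_rto


if __name__ == "__main__":
    inventory = [
        Server("erp-db", True, 800, 200),      # estimated 4 h restore
        Server("web-01", False, 50, 100),      # not under any backup routine
        Server("file-srv", True, 3000, 250),   # estimated 12 h restore
    ]
    print(check_inventory(inventory, rto_hours=8))
```

With an RTO of 8 hours, this example inventory yields `(['web-01'], ['file-srv'])`: one server has no backup at all, and one is backed up but would take an estimated 12 hours to restore. A real check would draw these figures from measured restore history rather than a single assumed throughput.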

These should be routine checks that cause hardly any problems. Still, headlines such as “Organisation down and hackers took the environment down: no backups to restore operations” keep popping up in the media. Without control over the whole, fundamental risks can materialise.

Therefore, risk mitigation trends and mitigation analysis should be key visuals for those in charge.

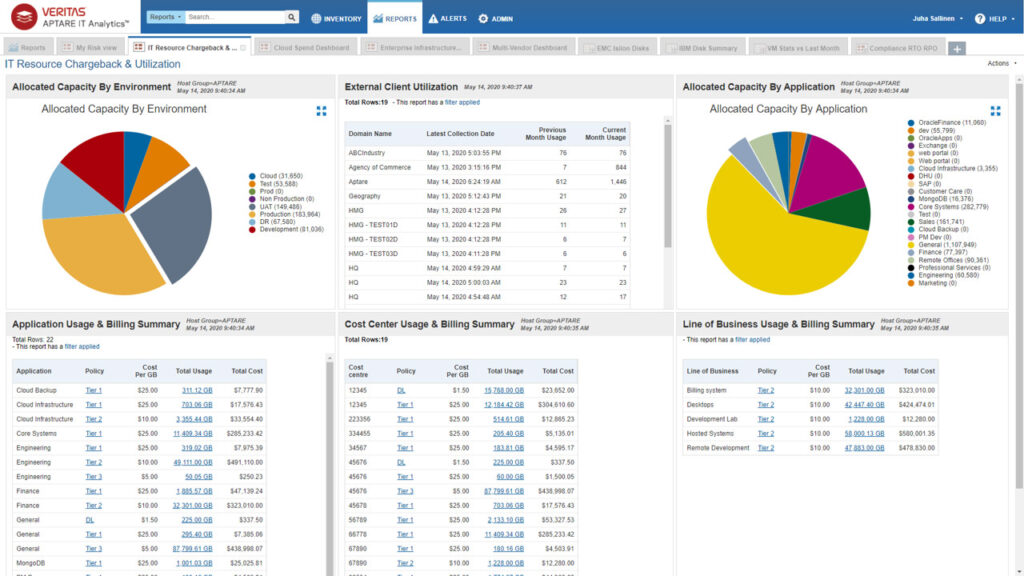

For organisations operating internationally, the current status quo is capacity scattered and under-used across many platforms: scattered, under-used, but definitely overpaid.

Compliance requirements, directives, regulations and standards (such as PCI-DSS, NIS-D and the GDPR) often demand data availability. Naturally, business units typically demand it too.

Cloud, no cloud or hybrid cloud: what about one overview dashboard for all environments?

With this kind of tool, it is easier to answer those difficult questions: Are there risks that have not been traced? Is all capacity under control? Is there an environment that is a historical leftover? (You know the ones: “use this only during the migration” or “temporary”. We know.)

The reports presented in this blog are consistent and standardised even though the data sources and vendors are not. They are created automatically with a tool that correlates data across the whole set of products. All data is imported securely from the sources without installing agents, and the tool generates correlations and corrective actions for you to use.

With this tool, it is easy to drill down deeper, for example to a very technical level, or to move up to the cost or charge-back level.

The tool is Veritas APTARE IT Analytics. We also use it for infrastructure assessments, which analyse a sufficient number of core infrastructure components. Based on this data, we create corrective actions and risk mitigation plans to support your decision making. Ask us for more information or schedule a demo.

+358 40 5666 900

sales@gdprtech.com

Weekdays at 8–17

Puolikkotie 8, 5th floor, 02230 Espoo